- 09 July 2020

- Imagination Technologies

As automotive safety advances, advanced driver-assistance systems (ADAS) such as surround-view are becoming standard in modern cars. This demonstration shows how surround-view can be enhanced with accurate real-time reflections and with AI that detects hazards, making cars safer. When this is combined with the safety mechanisms in our new XS GPU family, it is clear why our GPUs are considered the safest in the world.

First, let’s start with the graphics problem. Have you ever looked at an existing surround-view system and thought, “Why does the car look like something out of a PS1 game, with flat, dull shading? Why doesn’t it look shiny, cool and more real, and why can’t I see the surroundings?”

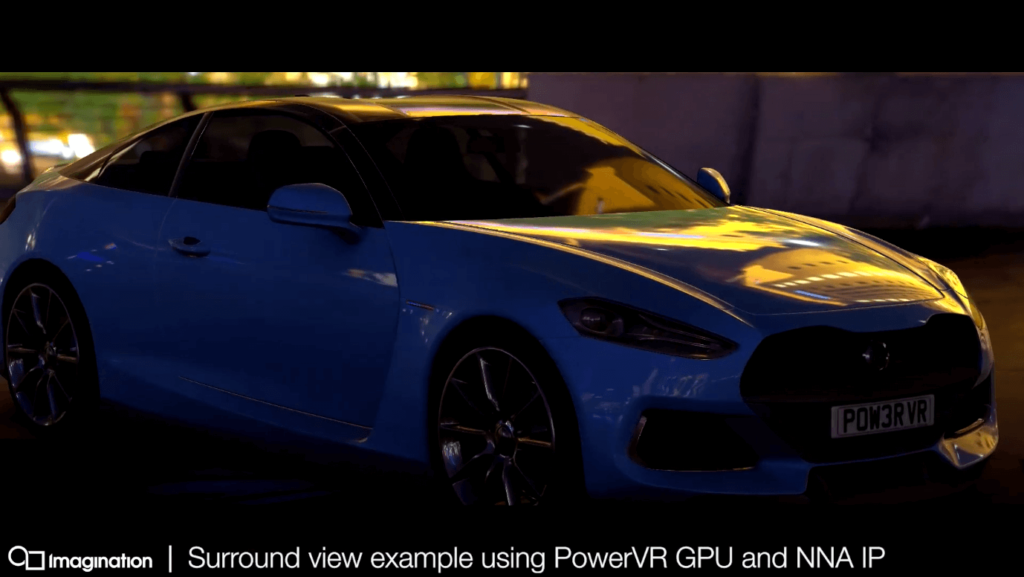

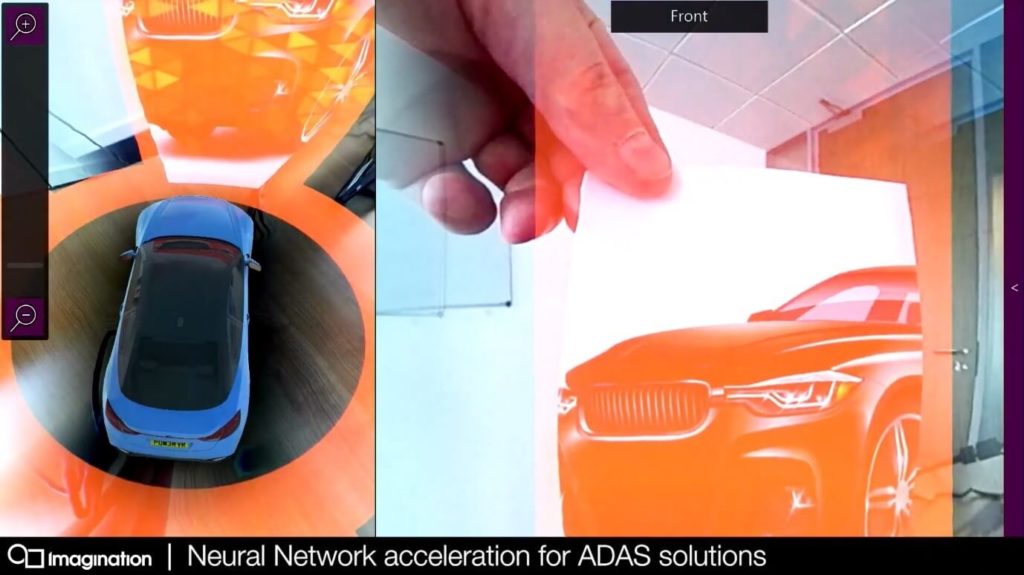

Rest assured, we have created an innovative solution that pushes the envelope of both visual quality and accuracy for an automotive application. Below is a screenshot from our smart surround-view demonstration that shows soft lighting and real-time reflections mapped onto the car from the surrounding environment, features that no surround-view application currently on the market offers.

To be clear, this means that not only does the driver see a high-quality, realistic 3D model of the vehicle, but its actual surroundings are also mapped onto the car in real time. As such, the driver gets accurate feedback about their surroundings, making the surround-view feature even safer and more capable.

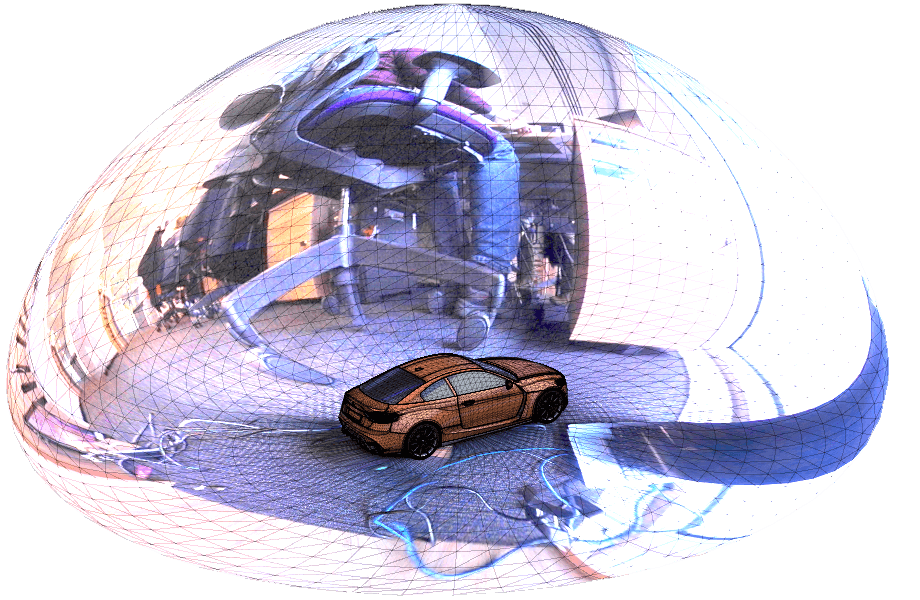

How is this all done, I hear you ask? First, the car rig captures four camera feeds simultaneously, stitches them together and maps them onto a hemisphere. This provides a top-down view of the car and its surroundings, which enables the driver to park safely and effectively. We then use this hemisphere to calculate real-time reflections that adapt to the car’s surroundings and map them onto the model of the car, adding an extra layer of safety whilst truly immersing the car and driver in the environment.

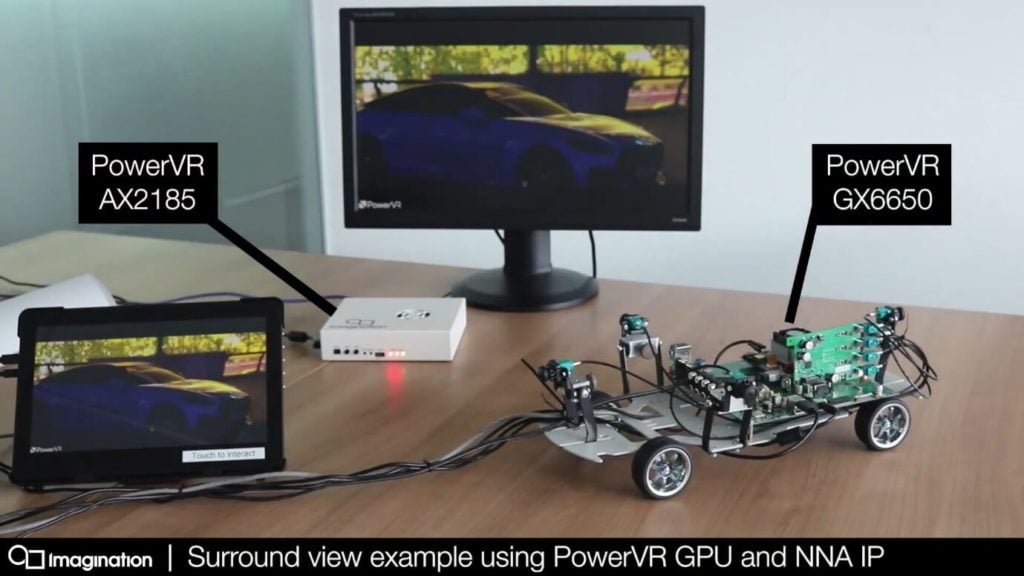
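To give a feel for the reflection step, here is a minimal Python sketch of how a reflected direction could be looked up in a hemisphere map. It is an illustration only, not the demo's shader code: the function names, the z-up convention and the hemisphere parameterisation (zenith at the texture centre, horizon at the edge) are all assumptions made for this example.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(a * a for a in v))
    return tuple(a / length for a in v)


def reflect(d, n):
    """Reflect incident direction d about unit normal n: r = d - 2(d.n)n."""
    dot = sum(a * b for a, b in zip(d, n))
    return tuple(a - 2.0 * dot * b for a, b in zip(d, n))


def hemisphere_uv(direction):
    """Map a unit direction (z is up) to (u, v) in [0, 1] on a top-down
    hemisphere texture. Assumed layout: straight up lands in the centre,
    the horizon lands on the inscribed circle's edge."""
    x, y, z = direction
    z = min(1.0, max(0.0, z))            # clamp below-horizon rays to the horizon
    elevation = math.asin(z)             # 0 at the horizon, pi/2 straight up
    radius = 1.0 - elevation / (math.pi / 2.0)
    azimuth = math.atan2(y, x)
    u = 0.5 + 0.5 * radius * math.cos(azimuth)
    v = 0.5 + 0.5 * radius * math.sin(azimuth)
    return u, v


# Top-down camera looking at the flat roof: the reflection points straight up.
view = (0.0, 0.0, -1.0)
roof_uv = hemisphere_uv(reflect(view, (0.0, 0.0, 1.0)))

# The same camera looking at a panel tilted 45 degrees towards +x:
# the reflection points at the horizon in the +x direction.
panel_uv = hemisphere_uv(reflect(view, normalize((1.0, 0.0, 1.0))))
```

In a real renderer this lookup would run per pixel in a fragment shader, with the stitched camera image bound as the texture being sampled.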

The application in this demo runs on a relatively modest PowerVR GX6650 GPU inside the Renesas R-Car H3. When you pair this up with our dedicated neural-network accelerator (NNA), the PowerVR Series2NX AX2185, you start to see the benefits of our “AI Synergy”, where we offload complex neural network processing from the GPU onto our NNA, whilst increasing the compute utilisation of the GPU.

The car rendering and camera stitching are performed on the GPU. A snapshot of the environment hemisphere is passed to the NNA, which processes the image and detects objects and hazards. Once the detection pass is complete, the detected hazards are fed back to the R-Car H3 and composited on top of the hemisphere.
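The final compositing step can be pictured with a small Python sketch. This is not the demo's code: it assumes, purely for illustration, that each detection comes back as a dictionary holding a normalised `(xmin, ymin, xmax, ymax)` box, and it stands in for the hemisphere image with a tiny character grid. The names `to_pixel_box` and `composite_hazards` are hypothetical.

```python
def to_pixel_box(box, width, height):
    """Convert a normalised (xmin, ymin, xmax, ymax) box into integer pixel
    coordinates, clamped to the image bounds."""
    xmin, ymin, xmax, ymax = box
    x0 = max(0, min(width - 1, int(xmin * width)))
    y0 = max(0, min(height - 1, int(ymin * height)))
    x1 = max(0, min(width - 1, int(xmax * width)))
    y1 = max(0, min(height - 1, int(ymax * height)))
    return x0, y0, x1, y1


def composite_hazards(frame, detections, marker="#"):
    """Return a copy of frame (a list of rows) with the outline of every
    detected hazard drawn on top. The input frame is left untouched."""
    height, width = len(frame), len(frame[0])
    out = [row[:] for row in frame]
    for det in detections:
        x0, y0, x1, y1 = to_pixel_box(det["box"], width, height)
        for x in range(x0, x1 + 1):      # top and bottom edges
            out[y0][x] = marker
            out[y1][x] = marker
        for y in range(y0, y1 + 1):      # left and right edges
            out[y][x0] = marker
            out[y][x1] = marker
    return out


# Stand-in for the hemisphere snapshot: an 8x6 grid of "pixels".
frame = [["." for _ in range(8)] for _ in range(6)]
# Hypothetical result handed back by the detection pass.
detections = [{"label": "person", "score": 0.9, "box": (0.25, 0.0, 0.75, 0.5)}]
overlay = composite_hazards(frame, detections)
```

Working in normalised coordinates means the snapshot sent for detection can be a different resolution from the image shown to the driver, since the boxes are only scaled to pixels at composite time.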

The hazards are detected using a neural network trained on a specific image set. In this case, we are using the GoogleNet Single Shot Detector (SSD) to detect people, cars and many other objects. This only scratches the surface of what could be achieved on the AI side as we edge closer to autonomous vehicles becoming the new standard. Neural networks will be pivotal in this, as they can be used to enhance a range of ADAS features such as driver monitoring and road-sign recognition.
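An SSD-style detector typically emits many overlapping candidate boxes, so its raw output is usually filtered by confidence and then by non-maximum suppression (NMS) before anything is drawn. The Python sketch below shows that generic post-processing step. It is not taken from the demo, and the thresholds, the detection dictionary layout and the function names are assumptions chosen for illustration.

```python
def iou(a, b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    ax0, ay0, ax1, ay1 = a
    bx0, by0, bx1, by1 = b
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(detections, score_threshold=0.5, iou_threshold=0.45):
    """Drop low-confidence detections, then apply per-class greedy NMS:
    keep the highest-scoring box and discard same-class boxes that
    overlap an already-kept box by more than iou_threshold."""
    candidates = sorted(
        (d for d in detections if d["score"] >= score_threshold),
        key=lambda d: d["score"],
        reverse=True,
    )
    kept = []
    for det in candidates:
        overlaps_kept = any(
            det["label"] == k["label"] and iou(det["box"], k["box"]) > iou_threshold
            for k in kept
        )
        if not overlaps_kept:
            kept.append(det)
    return kept


# Hypothetical raw detector output: two near-duplicate boxes on one person,
# one confident car and one low-confidence car.
raw = [
    {"label": "person", "score": 0.9, "box": (0.10, 0.10, 0.40, 0.60)},
    {"label": "person", "score": 0.8, "box": (0.12, 0.10, 0.42, 0.60)},
    {"label": "car", "score": 0.7, "box": (0.50, 0.50, 0.90, 0.90)},
    {"label": "car", "score": 0.3, "box": (0.00, 0.00, 0.20, 0.20)},
]
hazards = filter_detections(raw)
```

Here the duplicate person box and the low-confidence car are discarded, leaving one box per real object to overlay on the surround view.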

With the introduction of our XS family of GPUs for automotive, Imagination offers a range of functionally safe GPUs with unique safety mechanisms that can be combined with powerful NNAs to deliver faster, smarter applications across the board.

Let us know what you think of this demo in the comments. To keep up to date with everything related to Imagination, follow us on Twitter @ImaginationTech.