- 29 July 2013

- Imagination Technologies

Over the years, our close partnership with MediaTek has resulted in the release of some very innovative platforms that have set important benchmarks for high-end gaming, smooth UIs and advanced, graphics-rich browser-based applications in smartphones, tablets and other mobile devices. Two recent examples include:

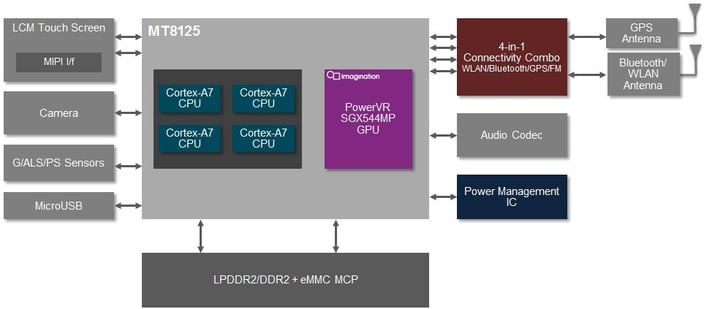

- MT8125/MT8389, an extension of MediaTek’s highly successful quad-core portfolio which integrates power-efficient PowerVR Series5XT graphics that deliver compelling multimedia features and sophisticated user experiences

- MT8135, the first in a series of PowerVR Series6-based mobile chipsets that are due to be announced in the second half of this year

MediaTek has been steadily establishing itself as an important global player for consumer products like smartphones, tablets and smart TVs, with a strong foothold in Latin America and Asia, and a rapidly growing presence in Europe and North America. Earlier this year, MediaTek introduced MT8125, one of its most successful tablet chipsets, aimed at high-end multimedia capabilities.

The MT8125 application processor from MediaTek has a PowerVR Series5XT GPU

While MT8125 has been extremely popular with OEMs including Asus, Acer and Lenovo, MT8135 has the potential to consolidate MediaTek’s existing customer base and open up exciting new opportunities thanks to the advanced feature set provided by Imagination’s PowerVR ‘Rogue’ architecture.

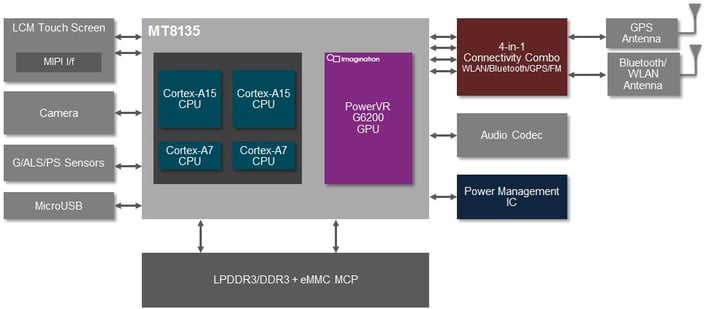

MT8135 is a quad-core SoC aimed at the mid- to high-end tier of the tablet OEM market. It supports a 4-in-1 connectivity package that includes Wi-Fi, Bluetooth 4.0, GPS and FM radio, all developed in-house by MediaTek. Miracast is another important addition to the multimedia package, enabling devices using MT8135 to stream high-resolution content more easily to compatible displays over wireless networks.

The MT8135 application processor from MediaTek has a PowerVR Series6 GPU

MT8135 incorporates a PowerVR G6200 GPU from Imagination that enables advanced mobile graphics and compute applications for the mainstream consumer market, including fast gaming, 3D navigation and location-based services, camera vision, image processing, augmented reality applications, and smooth, high-resolution user interfaces.

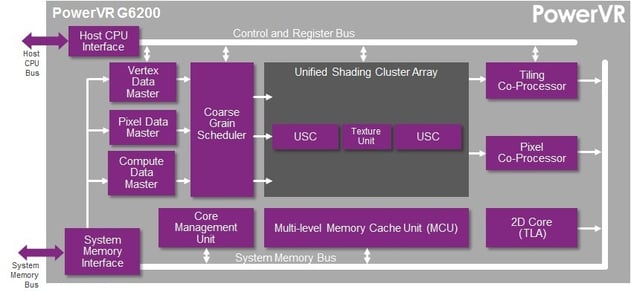

A block diagram of the PowerVR G6200 GPU core

As MT8135-powered mobile devices start appearing in the market, developers will have access to new technologies and features introduced by our PowerVR Series6 family such as:

- our latest-generation tile-based deferred rendering (TBDR) architecture implemented on universal scalable clusters (USC)

- high-efficiency compression technologies that reduce memory bandwidth requirements, including lossless geometry compression and PVRTC/PVRTC2 texture compression

- scalar processing to guarantee the highest ALU utilization and easy programming
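To give a rough sense of why texture compression reduces memory bandwidth, here is a minimal sketch that compares the footprint of a single texture at different bit rates. It assumes the publicly documented PVRTC rates of 4 and 2 bits per pixel against an uncompressed 32-bit RGBA texture. The 1024x1024 size and the helper names `texture_bytes` and `footprint_table` are illustrative assumptions, not figures or APIs from this post, and the calculation ignores mipmap chains and alignment padding.

```python
# Illustrative arithmetic only: footprint of one texture level at several bit rates.
# The bit rates are format properties; the texture size used below is an assumption.
BITS_PER_PIXEL = {
    "RGBA8888 (uncompressed)": 32,
    "PVRTC 4bpp": 4,
    "PVRTC 2bpp": 2,
}


def texture_bytes(width, height, bits_per_pixel):
    """Return the size in bytes of a single mip level (no mip chain, no padding)."""
    return width * height * bits_per_pixel // 8


def footprint_table(width, height):
    """Map each format name to its footprint in bytes for the given dimensions."""
    return {
        name: texture_bytes(width, height, bpp)
        for name, bpp in BITS_PER_PIXEL.items()
    }


if __name__ == "__main__":
    # Hypothetical 1024x1024 texture, chosen only to make the ratios easy to read.
    for name, size in footprint_table(1024, 1024).items():
        print(f"{name}: {size // 1024} KiB")
```

Under these assumptions the 4bpp variant needs one eighth of the memory of the uncompressed texture and the 2bpp variant one sixteenth, so proportionally less data has to be fetched each time the texture is sampled.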

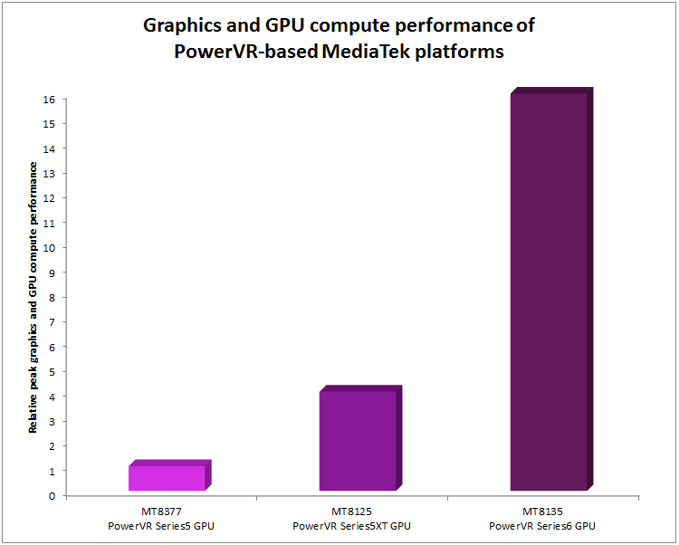

Thanks to the PowerVR G6200 GPU inside the MT8135 application processor, MediaTek takes high-quality, low-power graphics to a new level by delivering up to four times the ALU horsepower of MT8125, its PowerVR Series5XT-based predecessor. PowerVR G6200 fully supports a wide range of graphics APIs including OpenGL ES 1.1, 2.0 and 3.0, OpenGL 3.x, and DirectX 10_0, along with compute programming interfaces such as OpenCL 1.x, Renderscript and Filterscript. Future PowerVR GPUs will be able to scale up to OpenGL 4.x and DirectX 11_2.

A comparison of peak graphics and GPU compute performance for MediaTek MT8377, MT8125 and MT8135 processors (relative to a normalized frequency)

By partnering with Imagination, MediaTek has access to our industry-leading PowerVR graphics, worldwide technical support, and a strong ecosystem of Android developers capable of making the most of our technology. We look forward to seeing our brand-new PowerVR Series6 GPUs in the hands of millions of consumers soon, and we see MediaTek as one of our strategic partners for our latest-generation PowerVR GPUs moving forward.

For more news and announcements from our ecosystem and silicon partners, follow MediaTek (@MediaTek) and Imagination (@ImaginationTech) on Twitter and keep coming back to our blog.