- 24 February 2014

- Imagination Technologies

It’s been a few weeks since we introduced the ground-breaking PowerVR Series6XT GPU family and already we’ve seen amazing interest in the new line-up of GPUs from our customers and ecosystem. On our blog, our most avid readers have been eager for us to reveal how competitive our newly-released GPUs are compared to other mobile graphics parts announced at CES 2014.

All Rogue GPUs contain anywhere from half a cluster to eight clusters; this scalability enables our customers to target the requirements of a growing range of demanding markets, from entry-level mobile to the highest-performance embedded graphics, including premium smartphones, tablets, consoles, automotive and home entertainment.

This article is meant to provide an overview of PowerVR GX6650, the shining star of the PowerVR Series6XT family and the most powerful GPU IP core available today.

You want cores and GFLOPS? PowerVR GX6650 has plenty.

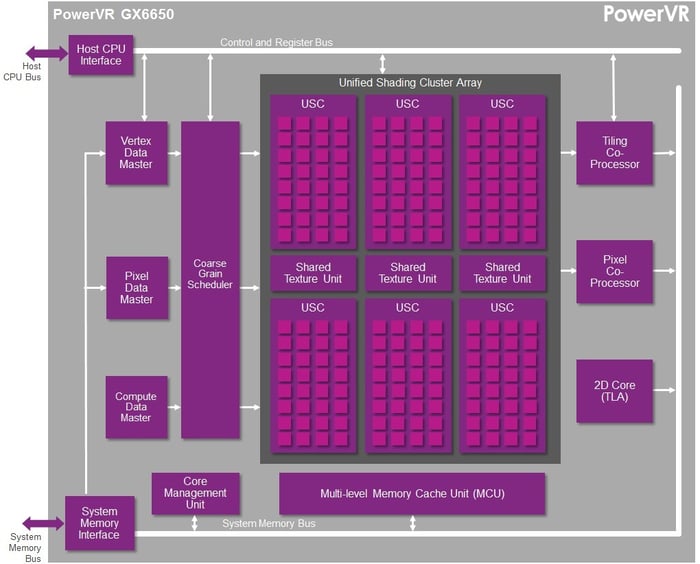

PowerVR GX6650 has six Unified Shading Clusters (USCs) and 192 cores, making it our fastest GPU to date. How we define a core, and how the USC works, is covered elsewhere on our blog.

A block diagram of the PowerVR GX6650 GPU shows the six clusters and 192 cores

Additionally, PowerVR GX6650 gives developers the flexibility to choose the most appropriate precision path for their application, making it the undisputed leader for low-power performance. For example, GX6650 includes a low-power, high-performance FP16 mode which can provide up to twice the performance of FP32 within a constrained power budget. We’ve improved the performance of this FP16 mode because FP32 is not always the best choice when performance has to be balanced against power efficiency.
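To see how core count and precision mode feed into a headline GFLOPS figure, here is a rough back-of-the-envelope sketch. Only the cluster count, the core count and the "up to twice" FP16 factor come from this article; the clock frequency and the FLOPs-per-core-per-clock value are hypothetical placeholders chosen for illustration, not published GX6650 specifications.

```python
# Back-of-the-envelope peak arithmetic throughput.
# From the article: 6 USCs, 192 cores, FP16 "up to twice" FP32.
USC_COUNT = 6
TOTAL_CORES = 192
FP16_SPEEDUP_MAX = 2.0  # upper bound only; real gains depend on the workload

# Assumptions (hypothetical, NOT published figures):
FLOPS_PER_CORE_PER_CLOCK = 2  # one multiply-add counted as 2 FLOPs
CLOCK_MHZ = 600               # illustrative clock; real SoC clocks vary


def cores_per_cluster(total_cores: int, clusters: int) -> int:
    """Cores in each cluster, assuming an even split across clusters."""
    return total_cores // clusters


def peak_gflops(cores: int, flops_per_core_per_clock: int,
                clock_mhz: float, speedup: float = 1.0) -> float:
    """Theoretical peak in GFLOPS: cores x FLOPs/clock x clock (MHz) / 1000."""
    return cores * flops_per_core_per_clock * clock_mhz * speedup / 1000.0


if __name__ == "__main__":
    print("cores per USC:", cores_per_cluster(TOTAL_CORES, USC_COUNT))
    fp32 = peak_gflops(TOTAL_CORES, FLOPS_PER_CORE_PER_CLOCK, CLOCK_MHZ)
    fp16 = peak_gflops(TOTAL_CORES, FLOPS_PER_CORE_PER_CLOCK, CLOCK_MHZ,
                       FP16_SPEEDUP_MAX)
    print(f"peak FP32 (assumed clock): {fp32:.1f} GFLOPS")
    print(f"peak FP16 upper bound:     {fp16:.1f} GFLOPS")
```

These are theoretical peaks under the stated assumptions; swap in the clock of a real device to get a comparable number.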

PowerVR GX6650 offers the best pixel throughput available

Another major advantage of our PowerVR GX6650 GPU is its massive pixel throughput rate. It is able to process 12 pixels per clock, up to three times as many as competing GPUs.

Pixel throughput is a metric which tends to be overlooked in favor of GFLOPS but is equally important when thinking about high resolution displays. Since PowerVR GX6650 is aimed at processors targeting high-end, high-resolution tablets or 4K smart TVs, pixel throughput becomes a hot topic, especially when you have FPS-heavy gaming or complex user interfaces.

Add to that a texture rate up to 50% higher than that of its direct competitors, and you quickly begin to understand that the front end of PowerVR Series6XT GPUs is a beast.
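To put the 12 pixels per clock figure in context for a 4K display, the following sketch compares peak fill rate with the number of pixels a panel consumes per second. The 12 pixels per clock comes from this article; the 600 MHz clock, the 3840x2160 resolution and the 60 fps target are assumptions picked for illustration.

```python
# Peak pixel fill rate versus what a display needs each second.
PIXELS_PER_CLOCK = 12  # from the article

# Assumptions (hypothetical, for illustration only):
CLOCK_MHZ = 600                 # illustrative GPU clock
WIDTH, HEIGHT = 3840, 2160      # a common 4K UHD resolution
TARGET_FPS = 60


def fill_rate(pixels_per_clock: int, clock_mhz: int) -> int:
    """Peak pixels written per second."""
    return pixels_per_clock * clock_mhz * 1_000_000


def display_demand(width: int, height: int, fps: int) -> int:
    """Pixels per second needed to draw every screen pixel once per frame."""
    return width * height * fps


def layers_per_frame(fill: int, demand: int) -> float:
    """How many full-screen layers per frame the peak fill rate could cover."""
    return fill / demand


if __name__ == "__main__":
    fill = fill_rate(PIXELS_PER_CLOCK, CLOCK_MHZ)
    demand = display_demand(WIDTH, HEIGHT, TARGET_FPS)
    print(f"peak fill rate: {fill / 1e9:.2f} Gpixels/s")
    print(f"4K at 60 fps:   {demand / 1e9:.3f} Gpixels/s")
    print(f"full-screen layers per frame: {layers_per_frame(fill, demand):.1f}")
```

Under these assumptions the peak fill rate covers roughly 14 full-screen layers per frame, which is the headroom that layered user interfaces and high frame rate games draw on.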

PowerGearing G6XT and PVR3C keep power consumption in check

Now that we’ve answered your performance-related questions, let’s look more closely at probably the biggest factor which influences mobile graphics: power consumption.

When it comes to power efficiency, PowerVR is and has always been ahead of the pack. PowerVR GX6650 includes all the latest power-saving technologies from Imagination, including PVR3C and PowerGearing G6XT.

PowerGearing G6XT is a set of power management mechanisms that lead to sustained performance within fixed power and thermal envelopes. PowerGearing for PowerVR GX6650 enables us to control all GPU resources, allowing dynamic and demand-based scheduling of the six shading clusters and other processing blocks.

PowerGearing G6XT offers advanced power management for best in class efficiency

PVR3C is a suite of three compression technologies: PVRTC and ASTC for texture compression, PVRIC for frame buffer compression and PVRGC for geometry compression. Whereas competing solutions have only started implementing these concepts, PowerVR GX6650 implements our second-generation PVR3C solutions, with optimized algorithms for all of the use cases that matter in mobile (gaming, web browsing, UIs and many more).

PVRIC works particularly well on high-resolution home screens found in modern mobile operating systems, yet still finds a great compression rate in more traditional dynamic gaming situations

Beyond the core count lies the best feature set available for mobile

The mobile industry has little in common with the desktop market, which explains why desktop-class, brute-force GPUs have always been, at best, a niche solution. The same consideration applies to proprietary APIs, which can be popular as niche solutions on desktops but are not the way forward in mobile, a market that has thrived on standards like OpenGL ES and OpenCL EP.

There is a reason why Khronos has created desktop and embedded profiles for both graphics and compute; it allows GPU companies to create solutions that are optimized for those specific markets and their specific requirements. It is true that you can offer various extensions through which you can enable certain unique features – this is something we’ve done very successfully over the years; but, fundamentally, if you are targeting mobile devices, you want to make sure your GPU is optimized for the right use cases and fully supports mobile API standards.

Our new Soft Kitty OpenGL ES 3.0 demo highlights the performance of high-end PowerVR Rogue GPUs

Success in mobile is not just a question of attaching the biggest number to one metric of a GPU: it requires a balanced approach which delivers a blend of performance, efficiency and features. PowerVR GX6650 offers uncompromised support for OpenGL ES 3.0, OpenCL 1.2 EP, Direct3D 11 Levels 9_3/10_0, OpenGL 3.x, OpenCL, and Renderscript, plus future-proofing for upcoming mobile APIs. It does so without the burden of advanced desktop features that cannot be used because no mobile API exists to expose them to developers.

In conclusion, PowerVR GX6650 shows that our TBDR graphics architecture is still the leader and is set to remain in that position for the long term.

What do you think about this high-end GPU? Let us know in the comment box below. Make sure to follow us on Twitter (@ImaginationTech) for the latest news and updates from Imagination.