- 24 January 2019

- Benny Har-Even

Introducing PowerVR Series3NX

Things move fast in the world of semiconductor IP, and our award-winning PowerVR Series2NX neural network accelerator (or NNA, as we like to call it) already has a successor: the PowerVR Series3NX. It offers several enhancements over its predecessor, delivering more performance and new features in a class-leading silicon area.

Welcome to the real world

Before we delve into the details of its improvements, let’s remind ourselves of some of the incredible uses of embedded AI and how it is impacting our world. AI will touch almost every conceivable market, from IoT, consumer and automotive to mobile, industrial, security and agriculture, and all of them will be enhanced and revolutionised by the power of deep learning and neural networks.

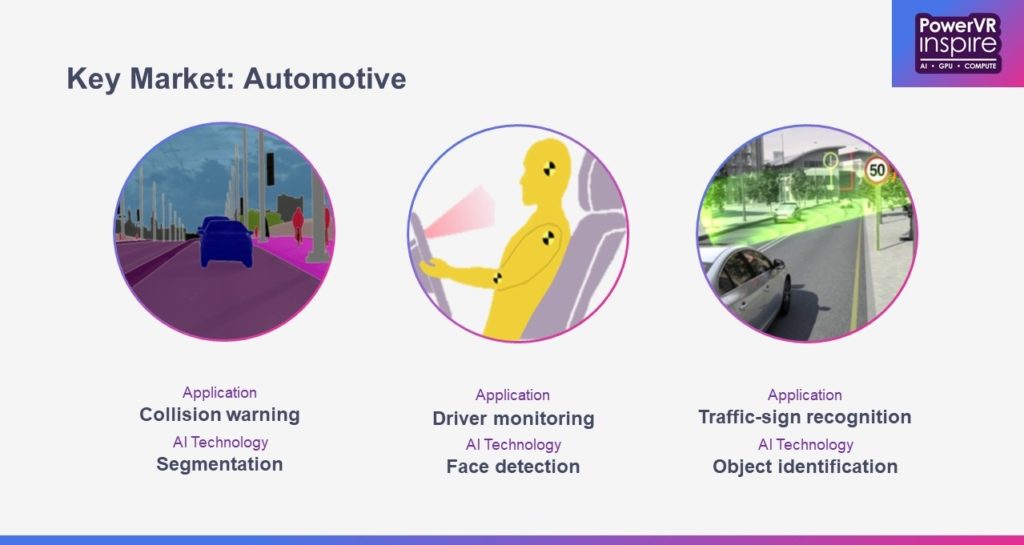

One of the most widely recognised computer-vision use cases today is driverless cars. To make a car ‘see’, there is a range of technologies to choose from, such as radar, lidar, infrared, thermal and CCD-based cameras; as CCD-based cameras tend to be the cheapest option, most autonomous cars are likely to be equipped with many of them. However, ‘seeing’ isn’t the problem: what is more challenging is ‘understanding’. Whichever technologies are used, data will arrive from multiple sensors at once, so using neural networks for sensor fusion is set to grow in importance over the next year.

Many large companies, both tech giants and traditional car makers, are working on the driverless car problem, but so too are small start-ups such as iSee, an MIT spin-off that a Technology Review article describes as making “spectacular progress… thanks to deep learning, a technique that employs vast data-hungry neural networks.”

Autonomous vehicles aren’t confined to the road, either. Machine learning algorithms are widely used in agriculture: they enable huge robotic combine harvesters to analyse the wheat as it’s cut (literally separating the wheat from the chaff), and they use computer vision to spray weed killer only on weeds rather than on crops. This makes the process more efficient, more environmentally friendly and more cost-effective.

Then there are more left-field examples, such as two I came across recently, both of which fall under the umbrella of ‘computer vision’. The first is a 3D vision system that enables farmers to monitor pigs to prevent ‘tail biting’. Rather unpleasantly, pigs sometimes bite other pigs’ tails without warning, which is not only very painful for them but can also cause infection, spoiling the meat and resulting in an economic loss for the farmer. By analysing the pigs’ behaviour, the vision system can quickly detect these incidents and potentially prevent them before they occur.

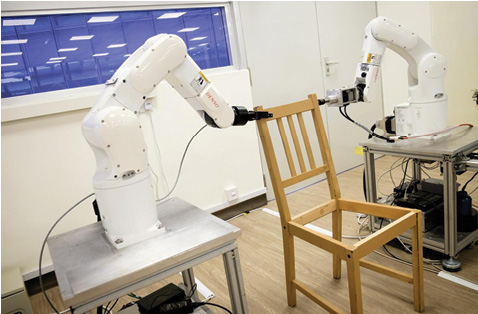

The other example is a set of robot arms that researchers have created to assemble a chair from IKEA (presumably they can ignore the instructions, so they don’t even have to worry about them not making sense). OK, it’s not quite the robot butlers we were reliably informed would be working for us by now, but it’s a step in the right direction, so we’ll take it.

Of course, there are myriad other use cases for neural networks in embedded devices, such as face identification for mobile or smart cameras, gesture recognition for TVs and image enhancement, particularly through upscaling, where detail must be added to the picture based only on the existing pixel information.

What all these use cases need is fast, efficient hardware acceleration of neural networks.

Introducing PowerVR Series3NX: our next-generation neural network accelerator

The PowerVR Series3NX is the newest iteration of our dedicated hardware design for neural network inference: that is, accelerating models that have already been trained to identify something specific. Thanks to our ecosystem of specialist tools and APIs, we can make these models run optimally on our specialised hardware.
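To make the distinction between training and inference concrete, here is a minimal sketch in Python of inference alone: a single dense layer whose weights are fixed in advance, so running the model is just a forward pass and nothing is learned. The weights, biases and the `infer`/`classify` helpers are hypothetical illustrations, not part of any Imagination tool or API.

```python
# Hypothetical "already trained" parameters for one dense layer:
# 3 input features -> 2 output scores. A real model would load these from a file.
WEIGHTS = [
    [0.8, -0.5, 0.1],   # weights feeding output 0
    [-0.3, 0.9, 0.4],   # weights feeding output 1
]
BIASES = [0.05, -0.1]


def relu(x: float) -> float:
    """Rectified linear activation: negative values are clamped to zero."""
    return max(0.0, x)


def infer(features: list[float]) -> list[float]:
    """Forward pass only: the weights are read, never updated (no training)."""
    scores = []
    for row, bias in zip(WEIGHTS, BIASES):
        # Each weight scales how much one input feature contributes to this output.
        total = sum(w * x for w, x in zip(row, features)) + bias
        scores.append(relu(total))
    return scores


def classify(features: list[float]) -> int:
    """Return the index of the highest-scoring output."""
    scores = infer(features)
    return max(range(len(scores)), key=lambda i: scores[i])
```

For example, `classify([1.0, 0.0, 0.5])` returns `0`: output 0 scores about 0.9, while output 1’s weighted sum is negative and is clamped to zero. An NNA accelerates exactly this kind of multiply-accumulate work, at a vastly larger scale.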

There are three main highlights to the PowerVR Series3NX:

- Five new single cores

- Improved power, performance and area

- New features

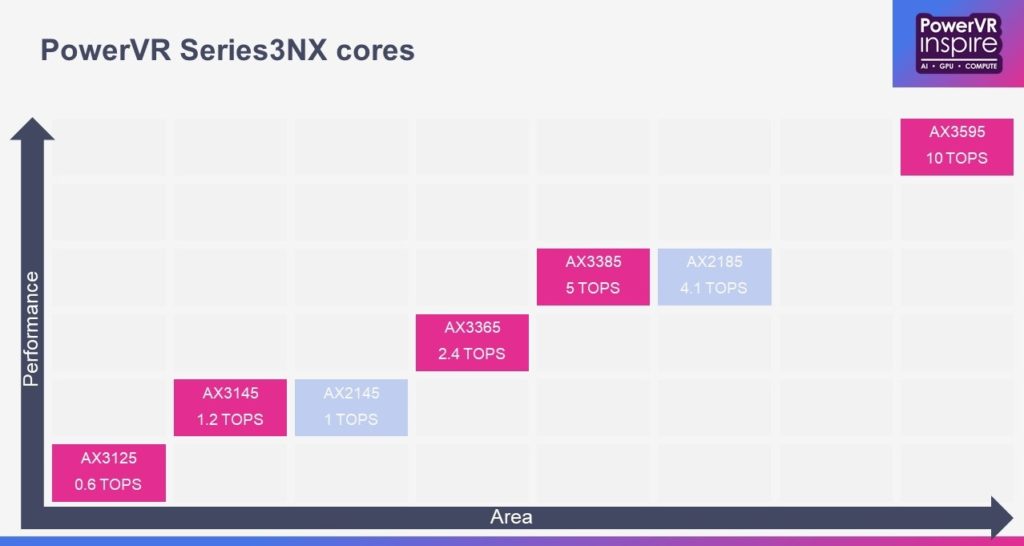

Our previous-generation design, the PowerVR Series2NX, consisted of two cores: the small PowerVR AX2145, offering one trillion operations per second (1 TOPS) of performance, and the larger PowerVR AX2185, delivering 4.1 TOPS. By contrast, the new PowerVR AX3145 delivers 1.2 TOPS and the PowerVR AX3385 5 TOPS, and crucially both do so in a smaller silicon area than the previous generation, which means reduced power consumption and lower costs for SoC manufacturers.

As the graph shows, the product line-up is also filled out by the PowerVR AX3125, a very small core offering 0.6 TOPS, for applications where minimal area is critical and low power consumption is a priority, such as battery-powered or energy-harvesting IoT devices. For example, the kinetic energy created by opening and closing a door could be enough to turn on a camera, take a snapshot and perform inference locally, with no need ever to replace a battery or provide mains power.

The PowerVR AX3595 delivers an impressive 10 TOPS, while at 2.4 TOPS the PowerVR AX3365 offers a balance between performance and area.
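The quoted peak figures lend themselves to some simple arithmetic. The sketch below computes the generational uplift between the cores paired above, plus a theoretical ceiling on inferences per second for a network of a given size. The 2 GOPs-per-inference workload is a hypothetical example, and the ceiling assumes operations are counted the same way as in the TOPS figures and that the core is fully utilised, which real workloads never quite achieve.

```python
# Peak throughput (TOPS) for each core, as quoted in this article.
SERIES2NX = {"AX2145": 1.0, "AX2185": 4.1}
SERIES3NX = {"AX3125": 0.6, "AX3145": 1.2, "AX3365": 2.4, "AX3385": 5.0, "AX3595": 10.0}


def uplift_percent(new_tops: float, old_tops: float) -> float:
    """Percentage increase in peak TOPS from an older core to a newer one."""
    return (new_tops / old_tops - 1.0) * 100.0


def max_inferences_per_second(tops: float, gops_per_inference: float) -> float:
    """Theoretical ceiling: peak ops/s divided by the ops one inference needs.
    Assumes 100% utilisation; memory bandwidth and scheduling lower this in practice."""
    return (tops * 1e12) / (gops_per_inference * 1e9)


print(f"AX2145 -> AX3145: +{uplift_percent(SERIES3NX['AX3145'], SERIES2NX['AX2145']):.0f}%")
print(f"AX2185 -> AX3385: +{uplift_percent(SERIES3NX['AX3385'], SERIES2NX['AX2185']):.0f}%")

# Hypothetical network needing 2 GOPs per inference.
for name, tops in SERIES3NX.items():
    print(f"{name}: at most {max_inferences_per_second(tops, 2.0):,.0f} inferences/s")
```

Note that peak TOPS alone understates the generational gain the article claims, since the new cores also achieve these figures in a smaller area.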

Overall, the PowerVR Series3NX provides even more for less: compared with the previous generation, it offers a 40% power reduction and a 40% increase in inferences per second in the same area.

Keeping the weight down

In neural networks, the ‘weights’ determine the contribution that a particular feature makes to an output. These weights account for a significant portion of the memory a network occupies, so with the PowerVR Series3NX we have added lossless weight compression. This reduces the size of the network model that must be stored and passed through system memory, giving Series3NX a useful overall bandwidth reduction of 35% over Series2NX and delivering greater power savings in an SoC.
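The article doesn’t say which compression scheme Series3NX uses, so the sketch below uses Python’s standard `zlib` (DEFLATE) purely as a stand-in to show what ‘lossless’ means for weights: the compressed blob is smaller, yet it decompresses to exactly the original bytes, so inference accuracy is unaffected. The weights are synthetic and `make_weights` is a hypothetical helper; the saving depends entirely on the data and is not meant to reproduce the 35% figure.

```python
import random
import zlib


def make_weights(count: int, seed: int = 0) -> bytes:
    """Hypothetical 8-bit quantised weights. Pruned, quantised models often contain
    many zeros and small values, which is what makes them compressible."""
    rng = random.Random(seed)
    out = bytearray()
    for _ in range(count):
        if rng.random() < 0.5:
            value = 0                                              # pruned weight
        else:
            value = max(-128, min(127, round(rng.gauss(0, 12))))   # small int8 value
        out.append(value & 0xFF)  # store the signed int8 as a two's-complement byte
    return bytes(out)


def compress_weights(raw: bytes) -> bytes:
    return zlib.compress(raw, level=9)


def decompress_weights(blob: bytes) -> bytes:
    return zlib.decompress(blob)


weights = make_weights(100_000)
blob = compress_weights(weights)
assert decompress_weights(blob) == weights  # lossless: bit-exact round trip
print(f"{len(weights):,} bytes -> {len(blob):,} bytes "
      f"({1 - len(blob) / len(weights):.0%} less to move through memory)")
```

A hardware scheme would be designed for fast, streaming decompression next to the compute units, but the principle is the same: fewer bytes cross the memory bus, and the values that arrive are identical.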

This all comes on top of an architecture that already saves bandwidth by supporting flexible bit depth on a per-layer basis. Layers can run at lower bit precision while inference accuracy is maintained, which results in comparatively smaller network models.
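Per-layer bit depth can be illustrated with a simple symmetric linear quantiser. This is a generic technique chosen for illustration, not necessarily how Series3NX or its tools quantise, and the layer names, weight counts and bit-depth choices below are hypothetical. The sketch shows the trade-off at work: fewer bits per weight shrink the model at the cost of a larger (but bounded) rounding error, which is why it helps to choose bit depth layer by layer.

```python
def quantize(weights: list[float], bits: int) -> tuple[list[int], float]:
    """Symmetric linear quantisation of one layer to signed `bits`-bit integers.
    One scale factor per layer maps the largest |weight| onto the top code."""
    qmax = 2 ** (bits - 1) - 1                      # 127 for 8 bits, 7 for 4 bits
    largest = max(abs(w) for w in weights)
    scale = largest / qmax if largest > 0 else 1.0  # avoid dividing by zero
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    return [c * scale for c in codes]


def model_size_bits(weight_counts: dict[str, int], bit_depths: dict[str, int]) -> int:
    """Total weight storage when each layer uses its own bit depth."""
    return sum(n * bit_depths[layer] for layer, n in weight_counts.items())


# Hypothetical three-layer network.
layers = {"conv1": 864, "conv2": 18_432, "fc": 10_240}
uniform_16 = model_size_bits(layers, {name: 16 for name in layers})
mixed = model_size_bits(layers, {"conv1": 8, "conv2": 4, "fc": 8})
print(f"16-bit everywhere: {uniform_16 // 8:,} bytes; per-layer mix: {mixed // 8:,} bytes")

sample = [0.5, -0.25, 0.1, -1.0]
for bits in (8, 4):
    codes, scale = quantize(sample, bits)
    error = max(abs(a - b) for a, b in zip(sample, dequantize(codes, scale)))
    print(f"{bits}-bit: max rounding error {error:.4f}")
```

In practice, layers that tolerate coarse rounding get fewer bits, while sensitive layers keep more, so the model shrinks without a meaningful loss of accuracy.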

Neural networks at scale

While the PowerVR AX3595 offers an impressive 10 TOPS in a single core, some use cases demand even higher performance. The PowerVR Series3NX can now scale to 20, 40, 80 or even 160 TOPS through four multi-core offerings. These deliver greatly increased performance while remaining optimised for power-constrained embedded devices.
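The multi-core figures line up with whole numbers of 10 TOPS cores. The sketch below assumes, without confirmation from the text, that each multi-core offering is built from AX3595-class 10 TOPS cores and scales linearly; real workloads rarely achieve perfectly linear scaling across cores, so treat these as peak figures only. `CORE_TOPS`, `peak_tops` and `cores_needed` are hypothetical names.

```python
import math

CORE_TOPS = 10.0  # assumption: multi-core configs are built from 10 TOPS cores


def peak_tops(cores: int, core_tops: float = CORE_TOPS) -> float:
    """Aggregate peak throughput under ideal (linear) multi-core scaling."""
    return cores * core_tops


def cores_needed(target_tops: float, core_tops: float = CORE_TOPS) -> int:
    """Smallest number of cores whose combined peak meets the target."""
    return math.ceil(target_tops / core_tops)


for target in (20, 40, 80, 160):
    n = cores_needed(target)
    print(f"{target} TOPS -> {n} cores ({peak_tops(n):.0f} TOPS peak)")
```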

Therefore, the PowerVR Series3NX enables true next-generation AI functionality by meeting the very high compute demands of applications such as autonomous driving.

Safe and secure

PowerVR Series3NX also brings with it new security features such as support for industry-standard security models. These are offered in four different configurations:

- Unsecured: Enables the fastest performance without any overhead.

- Protected model: The model, weights and intermediate data are secured. This is necessary when a trained model with great commercial value is loaded onto the NNA, such as in a security camera.

- Protected content: The input, output and intermediate data are all protected. This is necessary when video needs DRM to ensure it cannot be stolen, for example in a set-top box.

- Fully secure: The model, weights, intermediate data, input data and output data are all protected, for example in a face authentication or payment-related app.
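The four configurations are easiest to compare as sets of protected data. The sketch below models them with Python’s `enum.Flag`; the class and member names are hypothetical and say nothing about how Series3NX actually exposes or enforces these modes. The `weakest_config` helper simply picks the first configuration, in the order listed above, that covers everything an application needs protected.

```python
from enum import Enum, Flag, auto


class Data(Flag):
    """Kinds of data the NNA handles during inference."""
    MODEL = auto()
    WEIGHTS = auto()
    INTERMEDIATE = auto()
    INPUT = auto()
    OUTPUT = auto()


class SecurityConfig(Enum):
    """Each configuration maps to the set of data it protects."""
    UNSECURED = Data(0)
    PROTECTED_MODEL = Data.MODEL | Data.WEIGHTS | Data.INTERMEDIATE
    PROTECTED_CONTENT = Data.INPUT | Data.OUTPUT | Data.INTERMEDIATE
    FULLY_SECURE = (Data.MODEL | Data.WEIGHTS | Data.INTERMEDIATE
                    | Data.INPUT | Data.OUTPUT)


def protects(config: SecurityConfig, item: Data) -> bool:
    """True if every kind of data in `item` is protected by `config`."""
    return item in config.value


def weakest_config(required: Data) -> SecurityConfig:
    """First configuration, in definition order, that covers `required`."""
    for config in SecurityConfig:
        if protects(config, required):
            return config
    raise ValueError(f"no configuration protects {required!r}")
```

For example, `weakest_config(Data.WEIGHTS)` returns `PROTECTED_MODEL`, while needing both the model and the input protected, `weakest_config(Data.MODEL | Data.INPUT)`, leads to `FULLY_SECURE`.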

Compute SDK

However, the story does not end there. Later this year we will introduce a brand-new Compute SDK that brings everything together for developers to create their neural network applications and port them to the PowerVR Series3NX, and it will include support for SYCL.

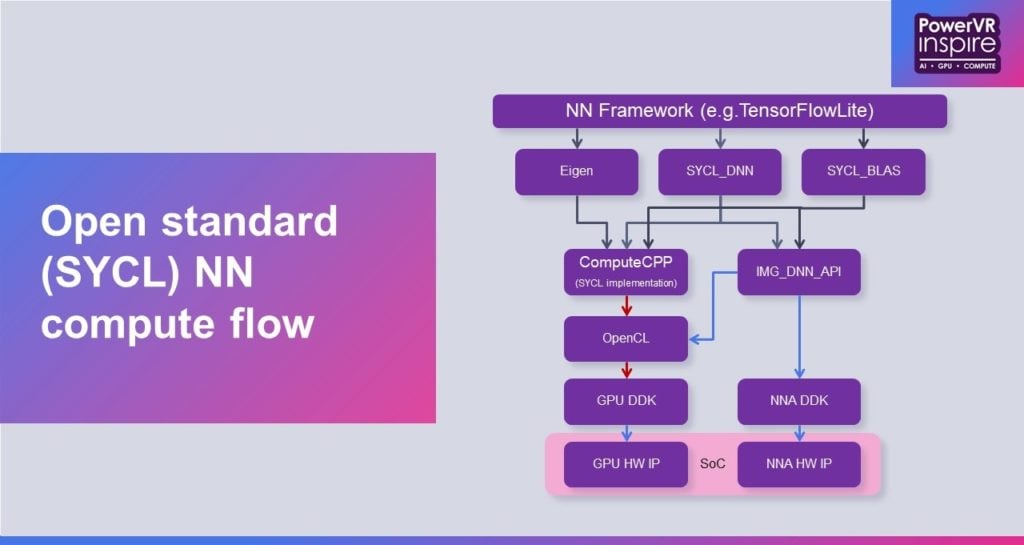

SYCL is a Khronos Group specification designed for heterogeneous compute. It enables developers to use C++ to program any device supported by OpenCL or by a neural-network-specific API. Imagination has mapped each SYCL library function to our proprietary IMG DNN API, providing a non-proprietary, open-standards programming environment for the whole industry.

The beauty is that it’s a royalty-free, cross-platform abstraction layer that lets SoC manufacturers unlock their hardware’s potential for developers using C++, one of the most widely known programming languages. It means that popular neural network frameworks, such as TensorFlow, can be compiled natively for Imagination’s PowerVR Series3NX hardware.

Imagination continues to work with the Edinburgh-based software tools developer Codeplay to make this a reality.

A comprehensive solution

PowerVR Series3NX is a powerful follow-up to our successful Series2NX: an optimised hardware solution that runs neural networks with best-in-class performance. With five new cores, it enables SoC designers to hit a range of performance targets, from 0.6 up to 10 TOPS with a single-core solution, while the new multi-core offerings can deliver up to 160 TOPS.

It all proves that, when it comes to selecting a neural network accelerator for your SoC or a target for development, it pays to separate the wheat from the chaff.