- 15 June 2012

- Imagination Technologies

“If you were ploughing a field, which would you rather use: two strong oxen or 1024 chickens?” Seymour Cray, the father of supercomputing

GPU compute refers to the current trend of using cores designed for rendering graphics to perform computational tasks usually handled by the CPU. It has had a major impact on the way programmers develop their applications.

The concept implies using GPUs and CPUs together in modern SoCs: the sequential part of the application runs on the CPU, while the data-parallel, computationally intensive part, which is often the more substantial one, is handled by the GPU. Momentum behind the GPU compute model has been building rapidly, with some experts predicting that GPU compute capability is likely to grow by 500x, while ‘pure’ CPU capability will grow by only 10x.
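To see why the size of the data-parallel part matters, the overall gain from offloading can be estimated with Amdahl's law. This is a minimal sketch: the article does not cite Amdahl's law itself, and the workload fractions and GPU speedup factors below are hypothetical values chosen only for illustration.

```python
def overall_speedup(parallel_fraction: float, gpu_speedup: float) -> float:
    """Amdahl's law: overall speedup when only the data-parallel share of the
    run time is accelerated by `gpu_speedup` and the rest stays on the CPU."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if gpu_speedup <= 0:
        raise ValueError("gpu_speedup must be positive")
    sequential_fraction = 1.0 - parallel_fraction
    return 1.0 / (sequential_fraction + parallel_fraction / gpu_speedup)


# Hypothetical workloads: (data-parallel share of run time, GPU speedup on that share)
for fraction, speedup in [(0.5, 20.0), (0.9, 20.0), (0.99, 20.0)]:
    result = overall_speedup(fraction, speedup)
    print(f"{fraction:.0%} parallel, {speedup:.0f}x on GPU -> {result:.2f}x overall")
```

Under these assumptions, the sequential share left on the CPU quickly becomes the limiting factor, which is why the split between the two processors matters as much as raw GPU throughput.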

This division of labour lets graphics processors achieve tremendous computational performance while maintaining power efficiency, and offers the end user an incredible overall system speedup that is transparent, seamless and easy to achieve.

The Need for Speed: a Crash Course in GPU compute APIs

With the hardware available but dedicated software support lacking, early GPU compute applications had to express their computations through the feature set of traditional graphics APIs such as OpenGL. This proved to be somewhat inefficient, and a number of solutions to the GPU compute programming problem have since started to appear.

Dedicated multi-threaded programming languages and APIs such as OpenCL™ (driven by Apple at first, but now a widely adopted Khronos standard), DirectX 11.1 (which provides access to the DirectCompute technology) and C for CUDA (Compute Unified Device Architecture) have been driven by key semiconductor and software companies and are now a tangible reality. In the high-performance workstation market, there are FireStream and CUDA-compliant products, although neither of those technologies has been ported to the embedded space.

The success of this approach was such that the industry started looking at FLOPS (FLoating point Operations Per Second) instead of CPU frequency when comparing the overall speed of computing systems.
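As a rough illustration of why FLOPS can tell a different story from clock frequency, theoretical peak throughput is commonly estimated as cores × lanes per core × operations per lane per cycle × clock rate. The sketch below applies that estimate; the two configurations are hypothetical and do not describe any real CPU or PowerVR core.

```python
def peak_gflops(num_cores: int, lanes_per_core: int,
                flops_per_lane_per_cycle: int, clock_mhz: float) -> float:
    """Theoretical peak throughput in GFLOPS (an upper bound, not measured speed)."""
    flops_per_cycle = num_cores * lanes_per_core * flops_per_lane_per_cycle
    return flops_per_cycle * clock_mhz * 1e6 / 1e9


# Hypothetical devices, for illustration only.
devices = {
    "few fast cores": dict(num_cores=2, lanes_per_core=4,
                           flops_per_lane_per_cycle=2, clock_mhz=1500.0),
    "many slow cores": dict(num_cores=16, lanes_per_core=16,
                            flops_per_lane_per_cycle=2, clock_mhz=400.0),
}

for name, config in devices.items():
    print(f"{name}: {config['clock_mhz']:.0f} MHz, "
          f"{peak_gflops(**config):.1f} peak GFLOPS")
```

In this made-up comparison the device with the lower clock has the higher peak figure, which is the kind of difference that frequency alone would hide.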

The Usual Suspects

Imagination’s PowerVR graphics technologies support all the main APIs now in use for GPU computing, which are being deployed more and more widely, particularly in desktop products but also in embedded systems.

Thanks to the USSE™ (Universal Scalable Shader Engine) present in the PowerVR SGX™ Series5 graphics IP cores and its updated sibling, USSE2™, which arrived with PowerVR Series5XT, Imagination was able to become an early adopter of OpenCL. Both products are currently available on the market and offer advanced capabilities such as round-to-nearest in floating point mathematics, full 32-bit integer support and 64-bit integer emulation.
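For readers unfamiliar with 64-bit integer emulation, the general idea is to build each 64-bit value from two 32-bit words and propagate the carry by hand. The sketch below shows that generic technique for unsigned addition; it is not a description of how USSE or USSE2 implement it, and the helper names are hypothetical.

```python
from typing import Tuple

MASK32 = 0xFFFFFFFF  # keeps a value within one 32-bit word

Word64 = Tuple[int, int]  # (high 32 bits, low 32 bits)


def split64(value: int) -> Word64:
    """Split an unsigned 64-bit value into (high, low) 32-bit words."""
    return (value >> 32) & MASK32, value & MASK32


def join64(high: int, low: int) -> int:
    """Recombine (high, low) 32-bit words into one unsigned 64-bit value."""
    return (high << 32) | low


def add64(a: Word64, b: Word64) -> Word64:
    """Add two emulated 64-bit values using only 32-bit-wide results."""
    a_hi, a_lo = a
    b_hi, b_lo = b
    lo = (a_lo + b_lo) & MASK32
    # If the low word wrapped around, it is now smaller than either input.
    carry = 1 if lo < a_lo else 0
    hi = (a_hi + b_hi + carry) & MASK32  # wraps modulo 2**64 overall
    return hi, lo


total = add64(split64(0x00000001_FFFFFFFF), split64(0x00000000_00000001))
print(hex(join64(*total)))
```

Each emulated operation costs several 32-bit instructions, which is why native 32-bit support and emulated 64-bit support are listed as separate capabilities.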

These GPU computing features have already been integrated into several popular platforms found in many mobile phones and tablets. By offering the possibility to combine up to sixteen PowerVR SGX cores on a chip, Imagination is able to deliver performance on par with discrete GPU vendors, while still retaining unrivalled power, area and bandwidth efficiency. Because power consumption increases super-linearly with frequency, the PowerVR SGX family relies on high parallelism at low clock frequencies, enabling programmers to write efficient applications that can benefit from the OpenCL mobile API ecosystem. This in turn enables advanced applications and parallel computing for imaging and graphics solutions.
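The trade-off between frequency and parallelism can be made concrete with a simplified model. The sketch below assumes that dynamic power follows P ≈ C·V²·f and that supply voltage scales in proportion to frequency, so power per core grows with the cube of frequency. It also assumes perfect parallel scaling and ignores leakage and voltage floors; these are textbook simplifications, not figures from Imagination.

```python
def relative_throughput(num_cores: int, relative_frequency: float) -> float:
    """Throughput relative to one core at frequency 1.0, assuming ideal scaling."""
    return num_cores * relative_frequency


def relative_dynamic_power(num_cores: int, relative_frequency: float) -> float:
    """Dynamic power relative to one core at frequency 1.0.

    Assumes P ~ C * V^2 * f with V proportional to f, so P ~ f^3 per core.
    """
    return num_cores * relative_frequency ** 3


# Hypothetical configurations that all deliver the same ideal throughput.
for cores in (1, 2, 4, 16):
    frequency = 1.0 / cores
    print(f"{cores:2d} cores at {frequency:.4f}x clock: "
          f"throughput {relative_throughput(cores, frequency):.2f}, "
          f"power {relative_dynamic_power(cores, frequency):.4f}")
```

Real silicon will not follow this model exactly, but it shows why spreading work over many slower cores can cost less power than running one core faster.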

Here is an example of how PowerVR GPUs can improve both the overall performance and power efficiency of a mobile platform.

PowerVR OpenCL demonstration on the TI OMAP 4 platform

The Godfather, part 6: PowerVR Series6 GPUs

The newly launched PowerVR Series6 IP cores address the problem of achieving optimal general purpose computational throughput while taking into account memory latency and power efficiency. This revolutionary family of GPUs is designed to integrate the graphics and compute functionalities together, optimizing interoperation between the two, both at hardware and software driver levels.

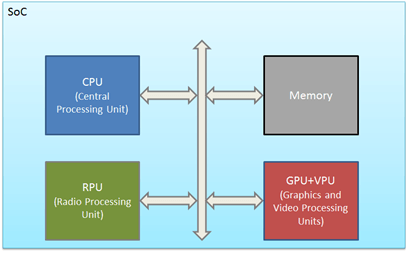

Another very important aspect of PowerVR Series6’s GPU compute capabilities lies in how the graphics core can dramatically improve overall system performance by offloading work from the CPU. The new family of GPUs offers a multi-tasking, multi-threaded engine that maximizes utilization and compute efficiency through a scalar/wide SIMD execution model, and it ensures true scalability in performance. This matters because the industry is sending a clear message that the CPU-GPU relationship is no longer based on a master-slave model but on a peer-to-peer communication mechanism.

A heterogeneous computing system

With its design targeting efficiency in the mobile space, the CPU is fundamentally a sequential processor. Therefore, it cannot handle intensive data-plane processing without quickly becoming overloaded and virtually stalling the whole system. As a result, computing architectures need to become heterogeneous systems, with true parallel-core GPUs, like the PowerVR Series6 IP graphics cores, working together with multi-core CPUs and other processing units within the system.

There is an ever-expanding variety of use cases where GPU computing based on PowerVR graphics cores brings great benefits. Examples include image processing (stabilization, correction, enhancement, or face detection and beautification tools), multimedia (real-time stabilization, information extraction or superimposition of information), computer vision (augmented reality, edge and feature detection) and general gaming, provided the applications are written with the right approach in mind.